Who Watches the Watchmen? When Vulnerability Discovery Outpaces Repair Capacity

I saw the FFmpeg incident blow up recently in the infosec community.

I don't see it covered in non-technical news outlets, but I think it raises a question urgent enough to shape the future of software security.

So, here's my attempt at describing the incident to a broader audience, as someone who believes in the promise of AI security research, while also wishing to protect the sustainability of open source.

TL;DR: If you take one thing from this post, it's that AI security research has scaled to the point where we can discover vulnerabilities in spades, but our capacity to fix them hasn't caught up. The rest of this post is my thoughts on how the responsibilities of security researchers and open-source maintainers should evolve in response to this transition.

What Horse Do I Have In This Race?

To avoid this being "yet another rehash of the FFmpeg incident," I think it'd be worth talking about my personal stake in these incidents.

My Relationship With Security

My relationship with security is a pretty complicated one. The really short answer is that I was really into security competitions at one point, learned more about exploiting memory safety issues than I could've asked for, went on a detour to learn about topics outside of security, and now I'm back in security.

By day, I'm an engineer on a security team at Big Tech. In my evenings and weekends, I do independent experiments in AI security.

The niche that I'm interested in is AI security: how AI will transform the field of security. In late 2024, my interest in AI and security was reignited as I came across more and more independent work on the use of AI in security research, including from friends who were participating in AIxCC or founding companies in this space. That work convinced me that AI will transform the field of security, and is already doing so.

I enjoy security, and even if (or when) AI "solves" security, some part of me will still identify as a security person. I believe there's no such thing as "solving" security, that our systems get stronger and harder to break, but we don't ever "solve" security.

And I believe that as long as one has a curiosity for understanding how systems work and the creativity to use that knowledge, they are a security person. That identity persists even as we go up higher in the level of abstraction, even as our tools shift from low-level exploits and hardcore analysis to plain English prompts for models. The core of what we do remains the same.

...And My Relationship With Open Source

One thing that has changed ever since I started working in my first job out of college is that the question of maintainability appears in my conscious mind a lot more, and this affects my relationship with open source software.

As an individual working on personal projects, my criteria for choosing what technologies to use in my projects are:

- "Is this a cool piece of technology to work with?"

- "What are the technical merits of this technology?"

- "Do I like the maintainers of this technology?"

Naturally, I love open source technology. Anything maintained by a gang of teenagers, a motley crew of software enthusiasts, or "just a guy with an anime/catgirl profile picture" on GitHub is fair game, and in fact is what I like to see and play with in the ecosystem.

As an engineer developing software on behalf of a large company, I find that I operate on wildly different criteria from before. The questions I now ask are:

- "How responsive are the maintainers of this technology?"

- "Do we have neighborhood support for this technology?"

- "Who can I hand this project off to when I transition off?"

The most pivotal moments that surfaced these questions in my conscious mind are these:

- On any occasion that we bring in an external dependency, especially if it's open source, and especially if it has github.com in its URL, the common concern and ensuing discussion is, "What security problems does this external dependency come with? Do we trust its maintainers to keep up with security updates? How much burden do we have to fork it and submit patches?"

- On another occasion, I pivoted away from an open-source library in favor of a different open-source package maintained by Big Tech employees. The rationale was that since those employees are paid to review requests for these packages, they'll be more responsive to security updates and feature requests, whereas the former, while actively maintained, depends on volunteers with varying availability and competing priorities, with the risk of burnout or life changes affecting development pace and responsiveness.

- On another occasion, I pivoted away from Rust and rewrote my project in Go, after learning that the new joiners on the team I could transfer my project to had more Go expertise, that there were simply more Go reviewers to go around (no pun intended), and that our team and sister teams had more neighborhood support for Go.

All of these moments encapsulate some pretty important themes:

- to what extent should volunteers be responsive to security reports on software that they maintain?

- to what extent should a Big Tech employee take on the responsibility of submitting patches to open source software that they use?

- the problem of knowledge transfer when the sole maintainer(s) step away from the project

Now, as a reader reading these questions, you probably have some simple, instant, binary yes/no answers forming in your head. "Of course maintainers should be responsive to security reports!" or "Of course the Big Tech employee should submit patches!" or "It's not the Big Tech employee's job, they have other priorities, they should just open the request and wait for the maintainers to handle it," or something along those lines.

Keep these binary yes/no answers in mind as we discuss the FFmpeg incident.

Okay, now to talk about FFmpeg

You've most definitely crossed paths with FFmpeg, if not directly, then through the countless applications built on top of it. FFmpeg is the invisible backbone that handles video and audio processing across the internet. Every time you stream a video, convert a file format, or watch content on platforms like YouTube, FFmpeg is likely working behind the scenes.

What's remarkable is that FFmpeg is entirely the work of volunteers. Despite having a reach so vast that its leadership could have monetized it, they chose to keep it free and open-source.

Now, AI agents have gotten really good at finding security vulnerabilities. My earliest observation of people using AI in security research was in the fall of 2024, when Google used their "Big Sleep" AI agent to find a real-world vulnerability.

By summer 2025, the capability had clearly matured. In July 2025, Google announced they'd scaled up Big Sleep and found over 20 vulnerabilities across multiple open-source codebases.

And, as you've guessed, one of those codebases was FFmpeg.

Why Maintainers Are Upset

Now, you'd imagine it's a good and wonderful thing when a security researcher points out a security flaw in your system, so you can keep it secure. But...

What's going on?

The upset comes from a fundamental mismatch between expectations and the reality of volunteer-maintained open-source projects.

First, for context on the 90-day disclosure policy: when Project Zero discovers a vulnerability, the vendor has 90 days to develop and release a fix. If the vendor patches the bug before the 90-day deadline, they get an additional 30 days for users to adopt the patch before Project Zero publicly discloses the technical details.

This is all good and reasonable, but it coincides with a new addition to the 90-day disclosure policy: the Reporting Transparency policy. In short, this policy states:

"Beginning today, within approximately one week of reporting a vulnerability to a vendor, we will publicly share that a vulnerability was discovered."

The new Reporting Transparency policy creates a public countdown timer on volunteer-maintained projects. If the maintainers don't patch the vulnerability within 90 days, Project Zero will publicly disclose the full technical details of the vulnerability, including information that could help others exploit it.
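To make the timeline concrete, here is a minimal Python sketch of the dates described above. The function and field names are hypothetical, the "approximately one week" notice is rounded to exactly 7 days, and treating a patch that lands on day 90 itself as on time is my assumption, not something the policy text quoted here settles.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

NOTICE_DAYS = 7        # "approximately one week" after the report (rounded)
FIX_WINDOW_DAYS = 90   # time the vendor has to release a fix
ADOPTION_DAYS = 30     # extra time for users to adopt a timely patch


@dataclass
class Timeline:
    public_notice: date   # the existence of a bug is announced, without details
    fix_deadline: date    # end of the 90-day window
    details_public: date  # full technical details are disclosed


def disclosure_timeline(reported: date, patched: Optional[date] = None) -> Timeline:
    """Compute the key dates for one report (hypothetical helper)."""
    deadline = reported + timedelta(days=FIX_WINDOW_DAYS)
    if patched is not None and patched <= deadline:
        # Patched in time: details wait another 30 days after the patch.
        details = patched + timedelta(days=ADOPTION_DAYS)
    else:
        # Not patched in time: details go public at the deadline.
        details = deadline
    return Timeline(reported + timedelta(days=NOTICE_DAYS), deadline, details)
```

For a report filed on 1 August 2025, `disclosure_timeline(date(2025, 8, 1))` puts the public notice on 8 August and the deadline on 30 October. From a volunteer's point of view, the clock is visible to everyone from the first week.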

The Burden on Maintainers

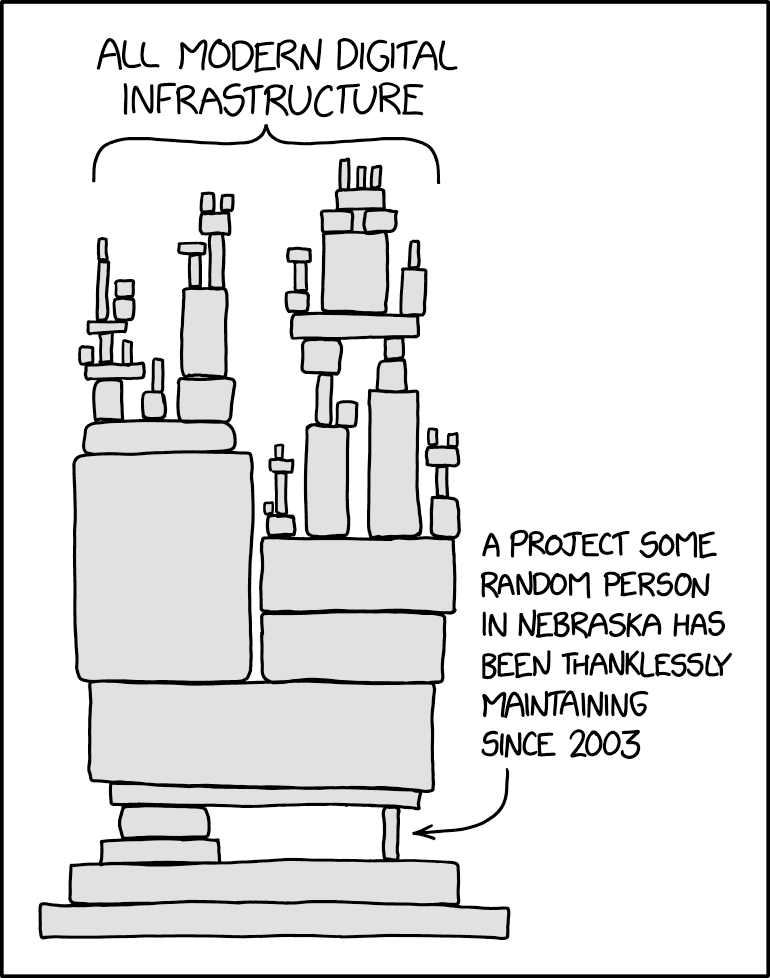

This creates an asymmetric pressure situation. FFmpeg is maintained entirely by volunteers with day jobs, families, and other commitments. These aren't employees who can be assigned to drop everything and fix security issues on a timeline. They're unpaid contributors who built and maintain critical infrastructure that powers much of the internet for free, simply because they care for the software.

The public disclosure essentially puts them on a visible timer, creating external pressure and potential reputational damage if they can't meet an externally imposed deadline. The security researchers benefit from demonstrating their AI capabilities and improving their own security posture, while the burden of fixing the vulnerabilities falls entirely on volunteers working in their spare time.

To put this in concrete numbers, Google's Big Sleep agent submitted at least 13 vulnerability reports to FFmpeg. FFmpeg currently has 22 active maintainers, each responsible for different components of the codebase. At any given time, these volunteers are juggling feature requests and fixing functional bugs that their users asked for, the kind that affect the end user experience.

Professional security organizations like Google's Project Zero follow responsible disclosure practices and work collaboratively with maintainers. But the democratization of AI security tools means anyone can now scan for vulnerabilities and file reports, regardless of their understanding of the security landscape or the burden their reports place on volunteers.

All of these bugs get treated as highest priority, pulling volunteers away from the features and improvements their users asked for. Who helps the volunteers with patching these bugs? Not the users, they lack the context around these edge cases that may never affect their workflows. Not the AI agent, it can identify vulnerabilities but can't navigate the nuances of FFmpeg's architecture to craft patches that won't break existing functionality or introduce new issues. The volunteers are left alone to shoulder the burden of remediation, while the security researchers move on to scan the next project.

The Case of libxml2

FFmpeg isn't alone in feeling this pressure. Libxml2, an XML parser and toolkit used across countless applications and operating systems, faced a similar breaking point just months earlier.

Like FFmpeg, libxml2 is foundational infrastructure. It's used by Apple across all their operating systems, by Google, by Microsoft, and countless other applications.

Also like FFmpeg, it's maintained by volunteers, or rather, it was primarily maintained by one volunteer: Nick Wellnhofer.

Nick Wellnhofer had stepped up to take the mantle as its de facto maintainer after its original creator, Daniel Veillard, faded from the project in the early 2020s.

Veillard had started libxml2 as a passion project, but after 20+ years of fielding bug reports and feature requests and complaints of infrequent releases, he wanted more freedom to work on other things aside from libxml2.

And so Veillard was grateful to transfer the responsibility to Wellnhofer.

It would not take long for Wellnhofer to understand why Veillard had been so enthusiastic about the handoff. What Wellnhofer didn't yet realize was that he wasn't inheriting a project; he was inheriting an unpaid support contract with the entire tech industry.

In May 2025, Wellnhofer announced he was spending several hours each week dealing with security issues, time he couldn't sustain as an unpaid maintainer.

Wellnhofer stated that he would reject security embargoes entirely. Going forward, security issues would be treated like any other bug, made public as soon as they were reported and fixed whenever he had time.

Full article at LWN.net. I recommend reading the full piece for more context on the libxml2 situation.

And finally, in September 2025, Wellnhofer announced that he would step down as the sole maintainer of libxml2. As of writing this post, libxml2, the project that powers critical infrastructure across the entire tech industry, is left without a maintainer.

As of November 2025, libxml2 remains orphaned. It is just one of many casualties in the ecosystem. There are many volunteer-driven projects powering much of our critical infrastructure, not just FFmpeg, that are headed for the same fate.

We must rethink how security research and open-source maintenance intersect. The current model, where AI scales our ability to find vulnerabilities exponentially while volunteer capacity to fix them stays flat, is unsustainable.

So, what do we do?

The most salient point for me, as I looked at the debates around the FFmpeg and libxml2 incidents, is the statement that "wealthy corporations" should help by becoming maintainers. These companies have the resources and engineering talent to maintain the infrastructure they depend on.

This is already a common practice in industry. Big corporations are known to assemble teams of paid employees to drive development of open-source projects. We see this with Meta for PyTorch, Google for Kubernetes and TensorFlow, and Microsoft for TypeScript. It has been very good for the ecosystem.

I agree with these sentiments, but what's different about AI-driven security research is that corporate backing alone won't solve the problem. Even if every critical open-source project had a well-funded team, AI-automated vulnerability discovery will increase the load on those teams faster than we can staff them.

This is not just a structural issue, but also a scaling issue. The tools for finding vulnerabilities have evolved exponentially with AI, but our human capacity to fix them hasn't.

How do we prevent a tragedy of the commons? If AI slop makes maintaining OSS unsustainable, what happens to critical infrastructure?

My Initial Thoughts on Responsibilities

I want to be upfront: these are my initial thoughts, not confident prescriptions. This is a complex problem that sits at the intersection of security, sustainability, and community norms. After reflecting on both my background in security and my experience as an engineer concerned with maintainability, this is where I currently stand.

My opinions are very likely to change as I progress in my career and interact with more perspectives. I marked the spiciest takes with 🌶️, the opinions most likely to age poorly. But that's what blog posts are for, for posterity, not prophecy, and this ship has sailed.

Keep the disclosure timeline

🌶️ I believe the principle behind disclosure timelines is sound. If a vulnerability isn't fixed within a reasonable timeframe, then yes, its details should be disclosed publicly. Yes, this makes it easy for bad actors to exploit it, but it also crowdsources the responsibility of fixing it, especially if the core group of volunteers is already overloaded.

Once disclosed, it's not just the original maintainers tasked with fixing it. Any user who cares enough about the vulnerability can and should step in to help.

🌶️ However, the bug reporter should be sympathetic to project resources when imposing a "reasonable timeframe": whether the project has paid maintainers, how many active contributors it has, and the complexity of the codebase. If the project is understaffed and run by unpaid volunteers, then the bug reporter should share the responsibility of patching to ensure that the team fixes the bug within this reasonable timeframe.

Distinguish between professional and casual reporting

The accessibility of AI security tools has created two distinct classes of vulnerability reporters. Professional security researchers, whether working for organizations like Google's Project Zero or independent consultants, typically understand the full context of what they're reporting. They know how to assess severity, can distinguish between theoretical and practical exploitability, and understand the maintenance burden their reports create.

But AI has lowered the barrier to entry so dramatically that anyone can now run a scanner and file reports. Many of these casual reporters lack the security expertise to properly evaluate what they've found. They may not understand whether a vulnerability is actually exploitable, whether it represents a real security risk, or how to communicate it effectively. They're essentially running a tool and forwarding the output to maintainers without adding any human judgment or analysis.

This distinction matters because the two groups create fundamentally different burdens on maintainers. Professional researchers typically file high-signal reports and often collaborate on fixes. Casual reporters, even with good intentions, often generate noise that drowns out the signal.

Raising the bar for vulnerability reports

The era of AI CVE hunters is breaking down our traditional reporting model. Volunteers are drowning in reports, many of which are false positives, duplicates, or obscure edge cases that would never be exploitable in practice. This is already such an issue that the open source project cURL has started cracking down on false reports that were generated by AI.

The flood of low-quality reports from casual reporters creates a critical problem: it drowns out the signal from professional security researchers. When maintainers are overwhelmed with noise, even high-quality reports from experienced researchers risk getting lost and delayed in triage. The casual reporters have inadvertently made everyone's job harder, including the professionals doing legitimate security work.

🌶️ My provisional answer is that the bar should be raised for reporting a vulnerability, and this applies to everyone, both casual reporters and professional security researchers. Simply running an AI scanner and forwarding its output should never be acceptable.

Historically, when someone reports a vulnerability, we leave it to the maintainer to patch it. This argument is straightforward: the maintainer has the best context around their codebase, understands the architecture, and knows the tradeoffs.

This was fine in a pre-AI world, when vulnerability reports were relatively rare and came primarily from people with security expertise.

But that world no longer exists. When maintainers are drowning in reports, professional researchers need to differentiate themselves from the noise by proposing patches and actively working with maintainers to arrive at solutions. This isn't just about being helpful, it's about making your legitimate security work visible and actionable amid the flood of AI-generated reports.

I've seen pushback from some security researchers on this proposal: "Patching isn't my area of expertise," or "I find bugs, fixing [bleeping expletive] code is the maintainer's job," or "I'm not skilled enough to write patches." I understand this perspective, but I think it misses something fundamental about what security research actually requires.

🌶️ If you've invested enough effort to prove a bug is genuinely dangerous, if you've built a working exploit, traced through the code paths, understood the data flow well enough to craft a proof-of-concept, then you've already demonstrated that you can understand the system deeply enough to propose a fix. The very act of crafting an exploit proves you can deeply understand a system's internals.

You don't need to be a maintainer to propose a patch. You don't need to know every architectural tradeoff or historical decision. What you need is the understanding of the vulnerability's root cause, which you already have if you've done the work to prove it's exploitable. Your patch doesn't need to be perfect, just a good-faith starting point that shows you've thought through the fix.

More importantly, you possess privileged knowledge, an intimate map of the system's internals that few others have. The maintainer may know the broader architecture, but you know this specific vulnerability's execution path better than anyone. With that privilege comes responsibility: finish what you started. Don't just document the vulnerability and walk away, leaving maintainers to reverse-engineer your understanding from a bug report.

If you can climb Everest to prove a vulnerability exists, you can help build the guardrails.

For professional security researchers, proposing a patch is now the cost of being heard. For casual reporters, it's the minimum bar for responsible disclosure. In both cases, it serves as a natural filter that separates signal from noise.

Not all bugs need patching, let patchers vote with their feet

🌶️ In a world where AI can trivially discover many potential vulnerabilities while the volunteer capacity to patch them remains finite, not every bug requires immediate action, and treating them all as equally urgent is itself a security failure.

If someone merely runs an AI vulnerability scanner against a public codebase like FFmpeg and opens bug reports for everything it finds, that's functionally no different from telling the FFmpeg maintainers about the AI vulnerability scanner and asking them to run it. We're just outsourcing the work of running a tool, with no thought given to filtering the noise produced by that tool.

The reality is that vulnerability scanners, especially AI-powered ones, produce a lot of false positives and low-priority findings. Professional security researchers understand this and filter their reports accordingly. They invest time determining which findings matter before creating noise for maintainers. Casual reporters often skip this crucial step.

When we raise the bar so that reporters are expected to help with patching, we create a natural filter: reporters self-filter based on what they think is actually worth fixing. Patchers vote with their feet.

When patchers vote with their feet, the patches should come from the following groups:

- Firstly, users who are personally affected. If you're running FFmpeg in production and this bug is breaking your video pipeline, you're motivated to fix it because it's blocking your actual work. Your willingness to dig into the codebase and propose a fix is a strong signal that this bug matters in the real world, not just in theory.

- Secondly, security researchers who can prove the bug is genuinely dangerous. Not just "theoretically this could be exploited under really, really specific circumstances," but someone who has built a working PoC, who's gone to the ends of Earth to demonstrate that yes, this condition can be triggered reliably, and here's the exploit chain that makes it practical.

A bug that's theoretically vulnerable but that no human is willing to invest time proving is exploitable and fixing is, in practice, low priority.

So, let the patchers vote with their feet. Not all bugs need fixing, and the bugs that truly matter shall get fixed.
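One way to picture this filter is as a small triage rule. The Python sketch below is only an illustration of the idea in this section. The `Report` fields, the `triage` function, and the three labels are all hypothetical names I made up, not any project's actual process.

```python
from dataclasses import dataclass


@dataclass
class Report:
    """Hypothetical shape of an incoming vulnerability report."""
    title: str
    has_working_poc: bool = False            # reporter proved exploitability
    has_proposed_patch: bool = False         # reporter attached a good-faith fix
    reporter_is_affected_user: bool = False  # the bug blocks the reporter's real work


def triage(report: Report) -> str:
    """Sort a report by how much human effort someone has invested in it."""
    if report.has_proposed_patch:
        # Someone has voted with their feet: look at this first.
        return "review-first"
    if report.has_working_poc or report.reporter_is_affected_user:
        # Real signal, but the reporter can still help with the fix.
        return "ask-for-patch"
    # Nobody has invested in proving or fixing it: low priority in practice.
    return "low-priority"
```

A raw scanner finding forwarded with no PoC and no patch, such as `triage(Report("possible overflow"))`, lands in the low-priority bucket, which is the point of the filter.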

Maintainers should integrate AI vulnerability scanners in their CI/CD pipelines

I have been framing maintainers thus far as passive recipients, as though they are merely reacting to bug reports, and as though the entire burden of security research falls on the shoulders of security researchers.

But I believe maintainers can take proactive control as well, by integrating AI vulnerability scanners directly into their development workflow.

In large companies, a common practice is that code changes aren't approved until they pass CI/CD checks. These checks confirm that the code doesn't break pre-existing functionality, follows style guidelines, passes security scans, and meets performance benchmarks.

I see a strong argument for open-source projects to adopt a similar approach with AI vulnerability scanners:

- Before merging significant changes, maintainers run an AI scanner on their code and review the output.

- They address the issues they believe are high priority, and they maintain a public list of false positives and "wontfix" items: bugs they've reviewed and decided don't warrant fixing because they're not exploitable in realistic scenarios, affect deprecated code paths, or would require architectural changes disproportionate to the risk.

- External reporters should check this list before submitting. If the issue is already documented as a known false positive or wontfix, the report adds no value and only creates noise.

This way, maintainers are not just passive recipients of whatever reports are thrown at them; they proactively manage their security posture and communicate their decisions.
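As a rough illustration of the public list described above, here is a minimal Python sketch of how a reporter (or a CI job) might check a finding against it before filing. Everything here is an assumption for illustration: the `Finding` fields, the file and function names, and the idea that findings can be matched exactly by file, function, and bug class. Real scanners may not produce identifiers that stable.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Finding:
    """Hypothetical scanner finding; a real scanner's output will differ."""
    file: str
    function: str
    bug_class: str  # e.g. "heap-overflow"


@dataclass(frozen=True)
class ReviewedEntry:
    """One line of the maintainers' public list."""
    finding: Finding
    status: str  # "false-positive" or "wontfix"
    reason: str


def lookup(finding: Finding, reviewed: List[ReviewedEntry]) -> Optional[ReviewedEntry]:
    """Return the maintainers' earlier decision on this finding, if any."""
    for entry in reviewed:
        if entry.finding == finding:
            return entry
    return None


# Hypothetical public list, as maintainers might publish it.
REVIEWED = [
    ReviewedEntry(
        Finding("src/legacy_parser.c", "parse_header", "integer-overflow"),
        status="wontfix",
        reason="deprecated code path, scheduled for removal",
    ),
]
```

If `lookup(finding, REVIEWED)` returns an entry, the report would only repeat a decision the maintainers have already made and explained. If it returns `None`, the finding is new to them and may be worth the reporter's time to verify.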

Going Forward

What happens when AI scales our ability to find problems faster than our ability to solve them? My answer is that we need to scale our sense of responsibility alongside our tools.

Finding a vulnerability is only the beginning of the work, not the end of it.

We're stewards of the systems we depend on. As those systems become easier to scan and harder to maintain, that stewardship means showing up not just with bug reports, but with patches, with context, and with respect for the humans on the other side. That's the identity shift I think we need in the AI era.

The people who truly understand systems aren't just the ones who can find vulnerabilities, but the ones who can find them and help remediate them and consider the human cost of their discovery. Not just "I can find bugs with AI," but "I can find bugs with AI and I'm willing to help fix them in ways that don't burn out the people maintaining our infrastructure."

I think back to those questions I learned to ask in my first job out of college, the ones that shaped how I think about the dependencies I bring in: "How responsive are the maintainers? Do we have neighborhood support for this technology? Who can I hand this project off to?"

Those questions treated maintainer responsiveness as a product I could shop for.

But I'm realizing now that the volunteers behind the dependencies I'm vetting, the ones I'm scrutinizing for responsiveness, are asking themselves the same questions about their dependencies, about their capacity, about who they can hand things off to when they need to step away.

We're all in the same boat, just at different points in the dependency chain. The difference is that some of us get paid to ask these questions as part of our jobs, while others are answering them in their spare time, for free, as volunteers for software that powers half the internet.

If I want responsive maintainers for the software I depend on, then I need to be part of making that responsiveness sustainable. That's the loop I'm trying to close in my own thinking.

Sources:
